Access to reliable and secure cloud platforms has become crucial in our connected society. The data centre has become the beating heart of virtually every application we use today, from automotive to industrial and smart home automation. Without near-instant connectivity to and from the server room, everything stops. A data centre’s uptime, a vital measure of overall performance, depends on monitoring and maintaining a constant supply of stable and efficient energy to the vast racks of computing and storage equipment. This article investigates the energy supply to data centres, highlights the need for power quality monitoring, and explains how to establish the building blocks of data centre infrastructure management (DCIM).

Our dependence on data centre availability

We’ve become wholly reliant on data centre access in just under two decades. Cloud connectivity has become omnipresent whether we’re at home streaming a movie, listening to our favourite music, or asking our smart speaker assistant to turn the heating up. The same reliance applies if we’re in our car, using a navigation app to plan our journey to avoid delays ahead. Our factories and offices depend on constant internet access to manufacturing, process control systems, and corporate business applications. Retail outlets are on the front line of data centre access, without which you are unlikely to get your favourite coffee brew.

As consumers, we often take always-on connectivity for granted. Behind your smartphone, computer, and digital assistant, there is a long chain of telecoms infrastructure that leads to and from the server rooms. After the high-bandwidth data networks that keep everything working, the most crucial utility is electric power.

Data centres consume significant amounts of electricity, and in keeping with global trends, considerable effort is underway to ensure the data hub’s equipment and infrastructure are as energy efficient as possible. Availability or “up-time” is a vital metric indicating data centre performance. Within the data centre industry, the “five nines” goal of 99.999% availability equates to an actual downtime of approximately 5 minutes per year, or under a second per day: a staggering statistic.
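The arithmetic behind the "five nines" figure is easy to check. The short Python sketch below converts an availability percentage into permitted downtime; the function names and the 365.25-day year are assumptions made for illustration. For 99.999% it gives roughly 5.26 minutes per year, or 0.864 seconds per day.

```python
# Convert an availability target into the downtime it allows.
# Assumption: an average year of 365.25 days.
MINUTES_PER_YEAR = 365.25 * 24 * 60
SECONDS_PER_DAY = 24 * 60 * 60


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Minutes of downtime per year permitted by the given availability."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


def downtime_seconds_per_day(availability_percent: float) -> float:
    """Seconds of downtime per day permitted by the given availability."""
    return (1 - availability_percent / 100) * SECONDS_PER_DAY


if __name__ == "__main__":
    for target in (99.9, 99.99, 99.999):
        print(
            f"{target}% -> "
            f"{downtime_minutes_per_year(target):.2f} min/year, "
            f"{downtime_seconds_per_day(target):.3f} s/day"
        )
```

Each additional nine cuts the permitted downtime by a factor of ten, which is why the last nine is so much harder to reach than the first few.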

To achieve such impressive reliability, servers, storage, and network infrastructure equipment are duplicated to build redundancy. Unfortunately, this further increases the complexity of power system design in the high availability data centre.
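A rough way to see why duplication helps is the textbook formula for parallel redundancy. The sketch below assumes that units fail independently and that any single surviving unit can carry the full load. Both are simplifications, since real facilities also face common-mode failures such as a shared utility feed. The function name is hypothetical.

```python
# Availability of N redundant units, assuming independent failures and
# that one working unit is enough to keep the service running.
def parallel_availability(unit_availability: float, units: int) -> float:
    """Return the combined availability of `units` redundant units."""
    if not 0.0 <= unit_availability <= 1.0:
        raise ValueError("unit_availability must be between 0 and 1")
    if units < 1:
        raise ValueError("units must be at least 1")
    # The system is down only when every unit is down at the same time.
    return 1 - (1 - unit_availability) ** units


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(f"{n} unit(s) at 99% each -> {parallel_availability(0.99, n):.6f}")
```

Under these assumptions, two units that are each 99% available combine to 99.99%, which illustrates why redundant design is the usual route to very high availability targets.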

The critical power architecture of a modern data centre

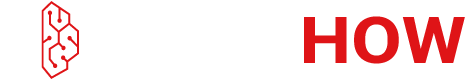

The typical primary power supply functions used in data centres are illustrated in Figure 1, highlighting the power path from the utility power provider to the critical data servers and storage racks.

Backup generators are crucial for any data centre when the utility grid fails. Automatic transfer switches (1 in Figure 1) continuously sense the incoming supply for abnormalities or impending failure and take immediate action to start the backup supplies and switch across to them. Switchgear (2) performs a vital role in isolating and protecting the data centre from the primary grid and distributing generator power. Inline uninterruptible power supplies (3) accommodate power sags and bridge the gap between primary grid failure and the emergency generators coming online. UPSs also protect critical equipment from harmonic distortions, switching transients, and frequency variations. The final part (4) of the power systems architecture is the power distribution racks that deliver reliable power to the vital loads.
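The hand-over between utility, UPS, and generator described above can be pictured as a simple selection rule. The Python sketch below is a deliberately simplified illustration, not the control logic of any real transfer switch. The names `Source` and `select_source` are hypothetical, and real equipment adds sensing thresholds, start-up delays, and retransfer timers.

```python
from enum import Enum


class Source(Enum):
    UTILITY = "utility"
    UPS_BATTERY = "ups_battery"
    GENERATOR = "generator"


def select_source(utility_ok: bool, generator_online: bool) -> Source:
    """Pick which supply feeds the critical load (simplified sketch)."""
    if utility_ok:
        return Source.UTILITY
    if generator_online:
        return Source.GENERATOR
    # Grid has failed and the generator is not yet online:
    # the UPS bridges the gap from its batteries.
    return Source.UPS_BATTERY


if __name__ == "__main__":
    # (utility_ok, generator_online) over the course of an outage.
    timeline = [(True, False), (False, False), (False, True), (True, True)]
    for utility_ok, generator_online in timeline:
        source = select_source(utility_ok, generator_online)
        print(f"utility_ok={utility_ok}, generator_online={generator_online} -> {source.value}")
```

The sequence in the example follows an outage from start to finish: normal utility supply, UPS bridging, generator supply, and the return to utility power.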

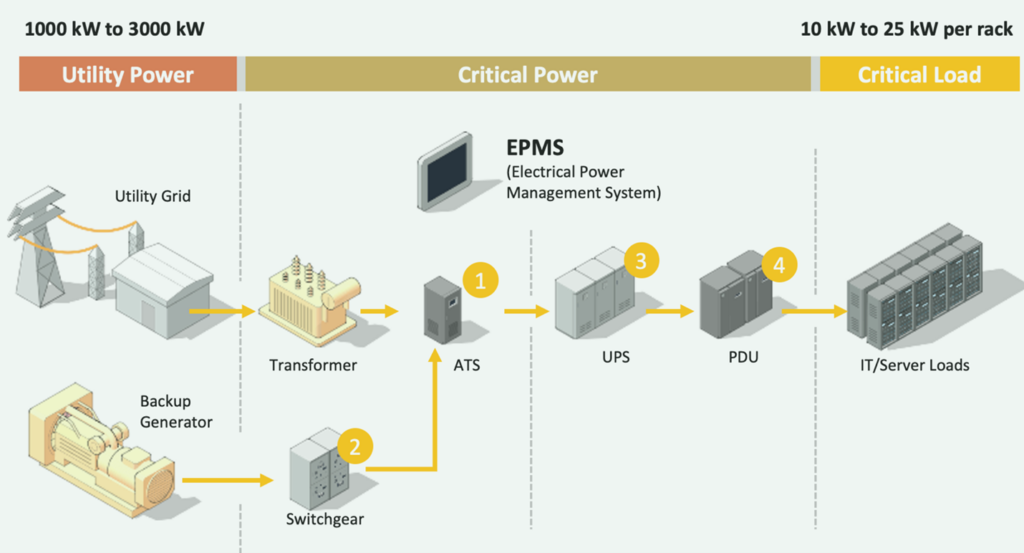

Data centre equipment generates significant heat, and keeping everything cool maintains system reliability. Figure 2 highlights the typical equipment used for heating, ventilation, and air conditioning (HVAC) of the equipment halls and racks. In large data centres, this equipment is monitored and controlled by a building management system (BMS).

The power and cooling infrastructure must be continuously monitored and optimised to achieve an energy-efficient data centre that uses less energy and is more sustainable for the environment. A data centre infrastructure management (DCIM) system with an integrated energy management system (EMS) and building management system (BMS) is essential for critical power monitoring.

Monitoring data centre infrastructure and power quality with one integrated network

Establishing a comprehensive DCIM requires every part of the data centre’s power plant, including power distribution, cooling, UPS, and electrical switchgear, to be connected to a single control room application. A resilient and secure network underpins a successful DCIM implementation, built from network appliances such as Layer 2 and Layer 3 Gigabit Ethernet switches, serial-to-Ethernet Modbus gateways, remote I/O units, and terminal servers.

Moxa is a world leader in industrial computing and critical network infrastructure solutions that enable connectivity for Industrial Internet of Things (IIoT) deployments and data centres.

Moxa products designed for DCIM implementations include serial-to-Ethernet Modbus gateways and remote I/O. A Modbus gateway example is the MGate MB3170/MB3270 series of 1 and 2-port Modbus serial-to-Ethernet (10/100BaseTX) gateways (Figure 3). Capable of connecting up to 32 Modbus TCP servers and up to 31 or 62 Modbus RTU/ASCII slaves, the series is ideal for bridging between the operational technology (OT) and information technology (IT) environments typically encountered in power and cooling management implementations. Moxa MGate is a proven product that has been installed in the switchgear of more than 10,000 data centres all over the world to pass digital meter data to the DCIM.
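To make the gateway's job more concrete, the sketch below shows the framing difference between the two sides of a Modbus gateway, following the public Modbus specifications. A Modbus TCP request carries a 7-byte MBAP header, while the serial RTU frame carries the unit address and a CRC-16. This is an illustration of the protocol conversion in general, not Moxa's implementation. The helper names, the unit ID, and the register range are hypothetical.

```python
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: initial value 0xFFFF, reflected polynomial 0xA001."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def build_tcp_read_request(transaction_id: int, unit_id: int,
                           start_address: int, quantity: int) -> bytes:
    """Build a Modbus TCP 'Read Holding Registers' (function 0x03) request."""
    pdu = struct.pack(">BHH", 0x03, start_address, quantity)
    # MBAP header: transaction id, protocol id (always 0),
    # length of the bytes that follow (unit id + PDU), unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def tcp_to_rtu(tcp_frame: bytes) -> bytes:
    """Re-frame a Modbus TCP request as a Modbus RTU request."""
    unit_id = tcp_frame[6]   # last byte of the MBAP header
    pdu = tcp_frame[7:]      # function code and data
    body = bytes([unit_id]) + pdu
    # The RTU CRC is appended low byte first.
    return body + struct.pack("<H", crc16_modbus(body))


if __name__ == "__main__":
    # Hypothetical request: read 10 registers from address 0 of unit 1.
    request = build_tcp_read_request(transaction_id=1, unit_id=1,
                                     start_address=0, quantity=10)
    print("Modbus TCP:", request.hex(" "))
    print("Modbus RTU:", tcp_to_rtu(request).hex(" "))
```

The unit ID in the TCP header is what lets a single gateway address many serial devices: the DCIM application talks to one IP endpoint, and the unit ID selects the meter on the serial line behind it.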

Suitable for larger-scale DCIM deployments, the MGate MB3660 series of 8 and 16-port redundant Ethernet Modbus gateways (Figure 5) is accessible by up to 256 Modbus TCP clients and can connect up to 128 Modbus TCP servers.

An example of a 2-port remote I/O is the ioLogik E1200 series (Figure 4). The E1200 series supports user-definable Modbus TCP slave addressing, a RESTful API for IIoT applications, and two Ethernet ports capable of upstream and downstream daisy-chaining. A total of six protocols are accommodated: SNMP, RESTful, Moxa MXIO, Modbus TCP, EtherNet/IP, and Moxa AOPC.

Five nines data centre availability with energy efficiency begins with redundant design and DCIM

The cloud comes to earth in a data centre. The heavy lifting of machine-learning deep neural networks, high-capacity media streaming, and safely navigating your next car journey all depends on high levels of data centre availability. To achieve these goals and operate an energy-efficient and sustainable facility, implementing a data centre infrastructure management system is vital. Moxa provides industrial-grade network infrastructure equipment that connects the OT and IT worlds to achieve a reliable and robust connectivity solution for DCIM.

Distrelec is a Moxa authorised partner.